(BEST PAPER AWARD) ViLA: Leveraging General-Purpose Audio for Training Vibration-Based Stadium Crowd Monitoring Models

Yen Cheng Chang, Jesse Codling, Yiwen Dong, and 3 more authors

In Proceedings of the 12th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation, Colorado School of Mines, Golden, CO, USA, 2025

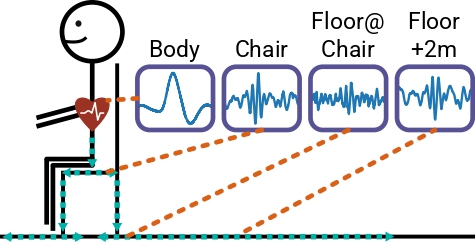

Crowd monitoring in sports stadiums is important for enhancing public safety and improving audience experience. Existing approaches mainly rely on manual observation, cameras, and microphones, which can be disruptive and often raise privacy issues. Recently, floor vibration sensing has emerged as a less disruptive and less intrusive method for crowd monitoring in sports stadiums. However, because vibration-based crowd monitoring is newly developed, open-source datasets are lacking, making it challenging to develop data-driven models. In this paper, we introduce Vibration Leverages Audio (ViLA), a vibration-based crowd monitoring method that reduces the reliance on labeled data by pre-training with unlabeled cross-modality data. Specifically, ViLA is first pre-trained on general-purpose audio data in an unsupervised manner, and then fine-tuned with a limited amount of labeled vibration data in sensing domains. Through this approach, ViLA learns general spectral pattern representations from audio, then adapts this knowledge to vibrations. By leveraging general-purpose audio datasets, ViLA reduces the reliance on large quantities of domain-specific vibration data. This is particularly important in sensing environments characterized by high data variance and limited sensing durations, such as sports games. Our real-world experiments demonstrate that pre-training the vibration model using publicly available audio data (YouTube clips) achieved up to a 5.8× error reduction compared to the model without audio pre-training.


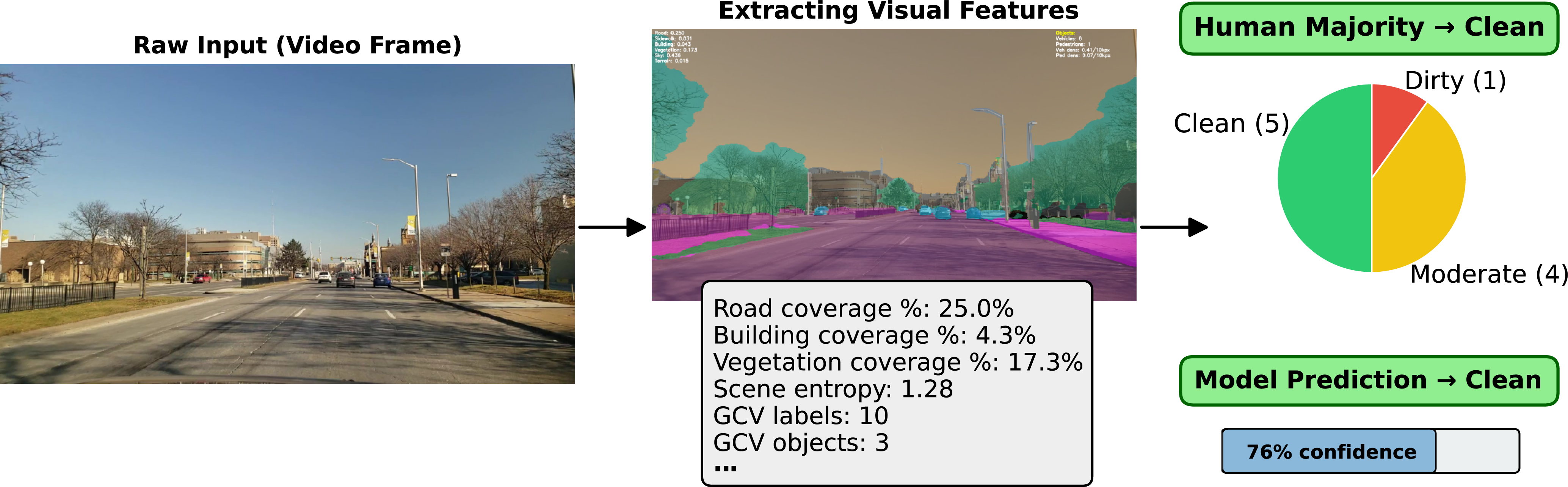

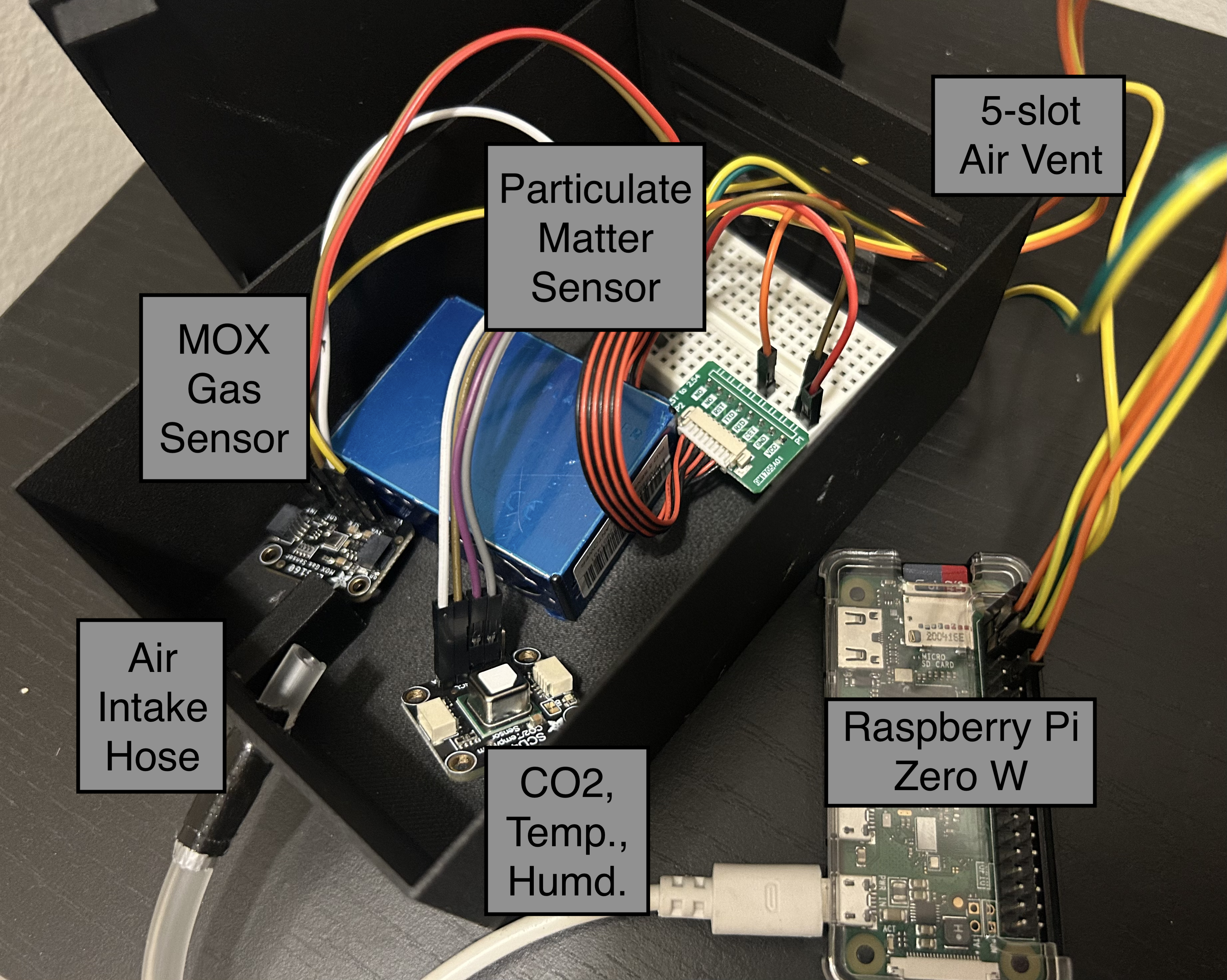


(BEST POSTER AWARD) Poster Abstract: Sniffing Out the City - Vehicular Multimodal Sensing for Environmental and Infrastructure Analysis

2025